The model must provide accountability

Transparency and explainability are key concerns for every machine learning model. For use in government agency applications in particular, it must be possible to comprehend and verify how the model was created. In the next step, fortiss and IBM addressed the question of accountability, in other words how precise and trustworthy the model is. In line with the motto “trust but verify”, they designed an accountability framework that allows the model to be tested for reproducibility and for errors that may have occurred during training. The system must also determine whether the model is biased with respect to a citizen's gender, ancestry or socio-economic status, whether it is fair, and whether it has been manipulated in some way.
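To make the idea of a bias check concrete, here is a minimal sketch of one common fairness measure, the demographic parity gap: the difference between the highest and lowest rate of positive decisions across groups. The article does not say which metrics the framework uses, so the metric, the function names and the sample data are all illustrative assumptions.

```python
from collections import defaultdict


def positive_rate_by_group(predictions, groups):
    """Return the share of positive (1) predictions for each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Difference between the highest and lowest positive rate across groups."""
    rates = positive_rate_by_group(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model decisions and a hypothetical protected attribute.
predictions = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = positive_rate_by_group(predictions, groups)
gap = demographic_parity_gap(predictions, groups)
print(rates, gap)
```

A gap close to zero means the groups receive positive decisions at similar rates. What counts as an acceptable gap is a policy decision, not something the code can settle.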

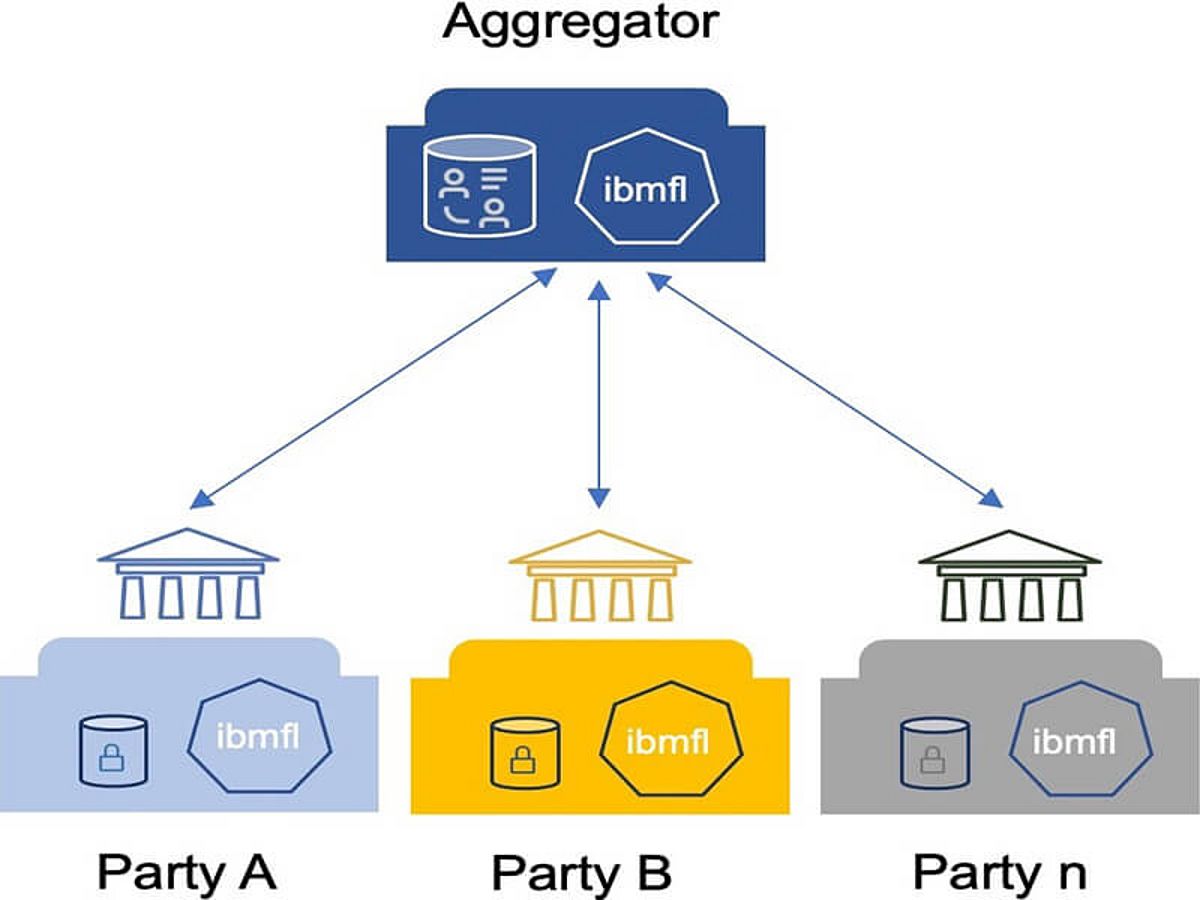

Federated machine learning models are difficult to verify because the raw training data stays with the individual participants and is not available to whoever checks the model. Federated learning systems can be trusted in theory, but to make this particular model accountable, IBM and fortiss employed a variety of claims by means of the Evidentia technology. Each claim relates to a specific aspect of the model, such as the training process workflow or the processed data. Verifying these claims is what establishes accountability, and the verification is also open to third parties such as auditors.
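The claim-and-verify idea can be illustrated with a short sketch. This is not the Evidentia API, which the article does not describe. It assumes that a claim records a SHA-256 digest of some piece of evidence (here a hypothetical training workflow description) and that an auditor later recomputes the digest to check that the evidence is unchanged. The names `Claim`, `digest` and `verify` are invented for this example.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    """A statement about the model plus the digest of its supporting evidence."""
    statement: str
    evidence_digest: str  # SHA-256 hex digest recorded when the claim was made


def digest(evidence: dict) -> str:
    """Hash the evidence in a canonical JSON form so key order does not matter."""
    canonical = json.dumps(evidence, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def verify(claim: Claim, evidence: dict) -> bool:
    """Return True if the presented evidence matches the digest in the claim."""
    return digest(evidence) == claim.evidence_digest


# Hypothetical workflow description recorded at training time.
workflow = {"rounds": 10, "aggregation": "fedavg", "participants": 3}
claim = Claim("Training followed the agreed workflow", digest(workflow))

verified = verify(claim, workflow)                      # unchanged evidence
tampered = verify(claim, {**workflow, "rounds": 11})    # altered evidence
print(verified, tampered)
```

The auditor needs only the claim and the evidence, never the raw training data, which is the reason a claim-based approach fits the federated setting.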