Towards quantifiably safe autonomous dynamic systems

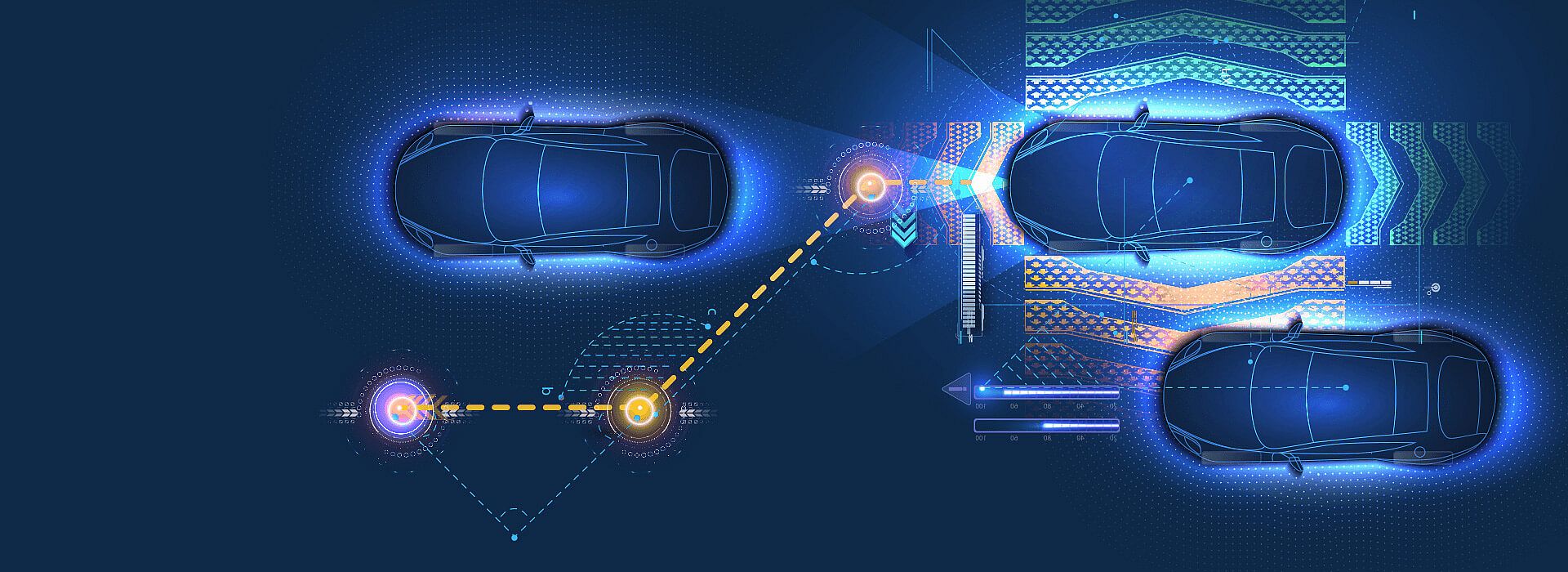

Autonomous systems must solve tasks in highly dynamic environments, e.g. in autonomous driving or drone delivery services. In doing so, they are confronted with environmental uncertainties arising from inaccurate perception, prediction and dynamic models of the environment. Further forms of uncertainty are introduced when such systems are implemented with learning-based and classical AI technology. This combination of uncertainties makes it hard to derive a quantifiable safety argument for such systems. The project MUKI investigates how to combine runtime and offline verification techniques under a common framework to achieve a quantifiable safety argument under the prescribed aggregation of uncertainties.

A central research question at the interface between classical safety engineering and the development of autonomous systems is as follows: How must an architecture for autonomous systems be designed to allow for a quantifiable safety argument given the uncertainties of the environment and of AI? Research at fortiss in this area serves to refine and detail the first standards that extend classical safety argumentation to AI-based autonomous systems.

Research of fortiss contributes in the following areas:

01.02.2021 – 31.12.2021